What are virtual machines (VMs)?

As server processing power and capacity increased, applications running on bare metal could no longer make full use of the available resources. VMs were created to fill that gap: software running on top of a physical server emulates a particular hardware system. A hypervisor, or virtual machine monitor, is software, firmware, or hardware that creates and runs VMs. It sits between the guest operating systems and the hardware, and it is what makes virtualizing the server possible.

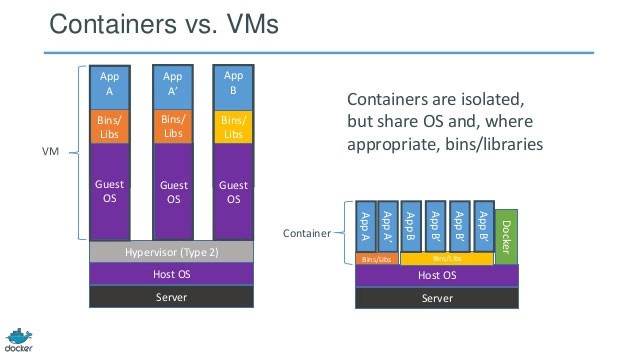

Each virtual machine runs its own operating system. VMs with different operating systems can run on the same physical server; a Unix VM can sit alongside a Linux-based VM, for example. Each VM carries its own binaries, libraries, and the application(s) it serves, so a VM may be many gigabytes in size.

Server virtualization provided a variety of benefits, one of the biggest being the ability to consolidate applications onto a single system. Gone were the days of one application per server. Virtualization ushered in cost savings through a reduced footprint, faster server provisioning, and improved disaster recovery (because the DR site hardware no longer had to mirror the primary data center). Development also benefitted from this physical consolidation: greater utilization of larger, faster servers freed up the machines left unused, which could be repurposed for QA, development, or lab gear.

What are containers?

Operating system (OS) virtualization has grown in popularity over the last decade as a way to make software run predictably and well when it is moved from one server environment to another. Containers provide a way to run such isolated environments on a single server and host OS.

Containers sit on top of a physical server and its host OS, e.g. Linux or Windows. Each container shares the host OS kernel and, usually, the binaries and libraries as well. Shared components are read-only, and each container writes through its own unique mount. This makes containers exceptionally “light”: they are typically only megabytes in size and take just seconds to start, versus minutes for a VM.
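The read-only sharing with a per-container writable mount described above can be pictured with a small toy model. The Python sketch below is an illustration only, not how any container runtime implements its filesystem: the file paths, their contents, and the `new_container` helper are all hypothetical, and a `ChainMap` merely stands in for a layered mount.

```python
from collections import ChainMap
from types import MappingProxyType

# Shared, read-only layer (hypothetical paths and contents, for illustration).
base_layer = MappingProxyType({
    "/bin/sh": "shell binary",
    "/lib/libc.so": "C library",
})


def new_container():
    """Return a container view: a private writable layer over the shared base."""
    # ChainMap sends every write to its first mapping and reads through the rest.
    return ChainMap({}, base_layer)


web = new_container()
db = new_container()

# Writes land in the container's own top layer only.
web["/etc/app.conf"] = "port=8080"
web["/bin/sh"] = "patched shell"  # shadows the shared copy for `web` only

print(web["/bin/sh"])          # patched shell
print(db["/bin/sh"])           # shell binary
print("/etc/app.conf" in db)   # False
print(base_layer["/bin/sh"])   # shell binary (shared layer is untouched)
```

Because the shared layer is stored once and each container adds only its own changes, the per-container cost stays small, which is the intuition behind the “light” footprint.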

The benefits of containers often derive from their speed and lightweight nature; many more containers than traditional VMs can be placed on a single server. Containers are “shareable” and can be used on a variety of public and private cloud deployments, accelerating dev and test by quickly packaging applications along with their dependencies. Additionally, containers reduce management overhead. Because they share a common operating system, only a single operating system needs care and feeding (bug fixes, patches, etc.). This is similar to what we experience with hypervisor hosts: fewer management points but a slightly larger fault domain. Also, because of the shared kernel, you cannot run a container whose operating system differs from the host OS, so there are no Windows containers sitting on a Linux-based host.
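To make the density claim concrete, here is a back-of-the-envelope calculation in Python. Every number in it is an assumption chosen for illustration (host memory, the memory a guest OS consumes per VM, the per-container overhead), not a measurement, and it looks at memory only while ignoring CPU, storage, and I/O.

```python
def instances_per_host(host_ram_mib, reserved_mib, app_ram_mib, overhead_mib):
    """Estimate how many instances fit in memory on one host.

    Each instance needs the application's memory plus a fixed per-instance
    overhead (a full guest OS for a VM, a thin runtime layer for a container).
    """
    usable = host_ram_mib - reserved_mib
    return usable // (app_ram_mib + overhead_mib)


# Hypothetical figures, all in MiB.
HOST_RAM = 256 * 1024      # 256 GiB server
RESERVED = 4 * 1024        # kept back for the host OS or hypervisor
APP_RAM = 512              # what the application itself needs
VM_OVERHEAD = 2 * 1024     # assumed guest OS footprint per VM
CONTAINER_OVERHEAD = 50    # assumed per-container footprint

vm_count = instances_per_host(HOST_RAM, RESERVED, APP_RAM, VM_OVERHEAD)
container_count = instances_per_host(HOST_RAM, RESERVED, APP_RAM, CONTAINER_OVERHEAD)

print(f"VMs per host:        {vm_count}")         # 100
print(f"Containers per host: {container_count}")  # 459
```

With these assumed figures the same server holds several times as many containers as VMs, because the guest OS cost is paid once by the host instead of once per instance. Real ratios depend heavily on the workload.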

Containers vs VMs: Which is better in the Data Center?

Which is better, containers or VMs, depends on what you are trying to accomplish. Virtualization enables workloads to run in environments that are separated from their underlying hardware by a layer of abstraction. This abstraction allows servers to be broken up into virtual machines (VMs) that can run different operating systems.

Container technology offers an alternative method of virtualization, in which a single operating system on a host can run many different applications. One way to think of containers vs VMs is that while VMs run several different operating systems on one compute node, container technology virtualizes the operating system itself.

Containers vs VMs: Virtual Machine Workloads

A VM is a software-based environment designed to simulate a hardware-based environment for the sake of the applications it will host. Conventional applications are designed to be managed by an operating system and executed by a set of processor cores. Such applications can run within a VM without any re-architecture.

With VMs, a software component called a hypervisor acts as an agent between the VM environment and the underlying hardware, providing the necessary layer of abstraction. A hypervisor, such as VMware ESXi, is responsible for executing the virtual machines assigned to it and can execute several simultaneously. Other popular hypervisors include KVM, Citrix Xen, and Microsoft Hyper-V. In the most recent VM environments, modern processors are capable of interacting with hypervisors directly, providing them with channels for pipelining instructions from the VM in a manner that is completely invisible to the applications running inside the VM. These environments also include sophisticated network virtualization models such as VMware NSX.

The scalability of a VM server workload is achieved in much the same way as on bare metal: with a Web server or a database server, the programs responsible for delivering service are distributed among multiple hosts. Load balancers are inserted in front of those hosts to direct traffic among them evenly. Automated procedures within VM environments make such load balancing processes sensitive to changes in traffic patterns across data centers.
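The idea of a load balancer spreading traffic across several hosts can be sketched in a few lines of Python. Round-robin is only one possible policy, chosen here because it is the simplest; the `RoundRobinBalancer` class and the host names are hypothetical, and a real balancer would also track host health and load.

```python
from collections import Counter
from itertools import cycle


class RoundRobinBalancer:
    """Hand each incoming request to the next host in a fixed rotation."""

    def __init__(self, hosts):
        hosts = list(hosts)
        if not hosts:
            raise ValueError("at least one host is required")
        self._rotation = cycle(hosts)

    def route(self, request):
        # The request content is ignored; only arrival order matters here.
        return next(self._rotation)


# Hypothetical VM hosts serving the same Web application.
balancer = RoundRobinBalancer(["vm-web-1", "vm-web-2", "vm-web-3"])

assigned = [balancer.route(f"GET /page/{i}") for i in range(6)]
per_host = Counter(assigned)

print(assigned)
print(per_host)  # each of the three hosts receives 2 of the 6 requests
```

Adding capacity in this model means adding another host to the rotation, which mirrors how such workloads scale out on bare metal and on VMs alike.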

Containers vs VMs: Container-Driven Workloads

The concept of containerization was originally developed not as an alternative to VM environments but as a way to segregate namespaces in a Linux operating system for security purposes. The first Linux environments resembling modern container systems produced partitions (sometimes called “jails”) within which applications of questionable security or authenticity could be executed without risk to the kernel. The kernel was still responsible for execution, though a layer of abstraction was inserted between the kernel and the workload.
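On a Linux system, the namespaces a process belongs to are visible under `/proc/self/ns`. The short Python sketch below simply lists them; it assumes a Linux host with `/proc` mounted and returns an empty result anywhere else. The `current_namespaces` helper is a name made up for this example.

```python
import os


def current_namespaces(ns_dir="/proc/self/ns"):
    """Map each namespace type of this process to its kernel identifier.

    Returns an empty dict when the directory is missing (e.g. not on Linux).
    """
    namespaces = {}
    try:
        entries = sorted(os.listdir(ns_dir))
    except OSError:
        return namespaces
    for name in entries:
        try:
            # Each entry is a symlink whose target looks like "pid:[4026531836]".
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue  # skip entries that cannot be read
    return namespaces


for ns_type, identifier in current_namespaces().items():
    print(f"{ns_type:10s} {identifier}")
```

Two processes that show the same identifier for a given type share that namespace. Running the script on the host and again inside a container will typically show different identifiers for types such as `pid`, `mnt`, and `net`, while the kernel underneath is the same.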

Workloads within container platforms such as Docker are virtualized. However, within Docker’s native environment, there is no hypervisor. Instead, the Linux kernel (or, more recently, the Windows Server kernel) is supplemented by a daemon that maintains the compartmentalization between containers while connecting their workloads to the kernel. Modern container environments often do rely on minimal operating systems such as CoreOS and VMware’s Photon OS, whose only purpose is to maintain basic, local services for the programs they host, not to project the image of a complete processor space.

Conclusion

VMs and containers differ on quite a few dimensions, but the primary difference is that containers virtualize an OS so that multiple workloads can run on a single OS instance, whereas VMs virtualize the hardware so that multiple OS instances can run on it. Containers’ speed, agility, and portability make them yet another tool to help streamline software development.